2025 Predictions: A Framework for the Future of AI Agents

The next wave of enterprise software won't look like the last. Instead of offering a buffet of 2025 predictions, I'm going deep on a single, important one: AI agents, which will soon fundamentally shift how work gets done.

I'll cover some foundational assumptions, the anatomy of AI agents, and a framework for thinking about the types of agents that may emerge. Then I'll outline the technical and operational hurdles standing between today's capabilities and a possible future of highly capable, aligned agents.

A disclaimer: diving deep means I could be wrong about the details. The terms I use may become outdated quickly. Yet I hope a more detailed analysis creates a better blueprint for building intentionally toward our future.

I. Setting the Stage

Before exploring the promise of AI agents, let me outline three foundational beliefs:

1. LLM capability is increasing at or near an exponential rate

The progression in LLM capabilities has been staggering. Consider Anthropic's recent upgrade from Claude 3 Sonnet to Claude 3.5 Sonnet: in mere months, we saw a step-function improvement in the model's ability to solve complex engineering problems.

The trend is clear: GPT-2 in 2019 had 80% accuracy counting to 10, GPT-3 in 2020 had 80% accuracy summing three-digit numbers, and GPT-4 in 2023 passed a simulated Uniform Bar Exam. Each 100x increase in compute has unlocked qualitatively new capabilities.

2. We should expect scaling laws to continue

Skeptics cite limited data, compute constraints, and other hurdles as barriers to continued scaling. Yet while human-level intelligence once seemed unimaginable, we've already witnessed machines pass the Turing Test in our lifetime. Betting against continued AI advancement means ignoring the evidence before us - our intuitions have consistently underestimated the pace of progress.

3. It is hard to process what this velocity means

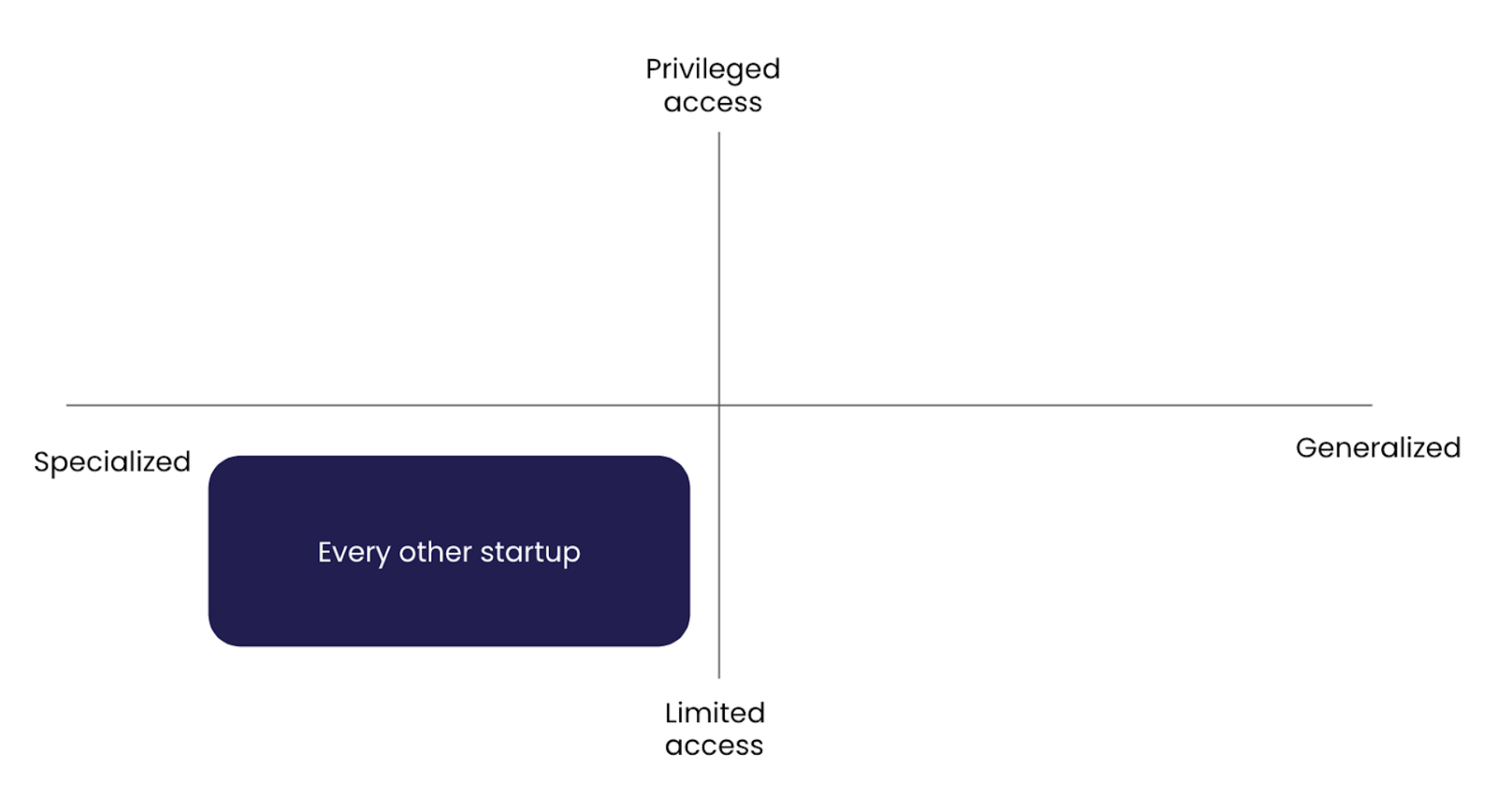

This unprecedented rate of progress creates a cognitive blind spot. Many AI agent startups are focusing on narrow verticals without anticipating what the next generation of LLMs will enable. I explore the implications in Section III, A Framework for Types of AI Agents.

II. Anatomy of AI Agents

To cut through the noise surrounding AI agents, simply imagine additional users executing tasks on your behalf. These agents are likely to manifest in one of two ways: either impersonating the user directly (sending emails as "Michelle") or operating transparently as an identified agent ("agent of Michelle").
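The two modes map neatly onto a distinction email already supports. A minimal sketch in Python using the standard library's `email.message.EmailMessage`: impersonation sets only `From`, while the transparent mode also sets the RFC 5322 `Sender` field, which names the mailbox responsible for actually transmitting a message written on someone else's behalf. The addresses and the `draft_email` helper are hypothetical, and whether any particular agent product works this way is an assumption.

```python
from email.message import EmailMessage

# Hypothetical addresses, for illustration only.
USER = "Michelle <michelle@example.com>"
AGENT = "Agent of Michelle <agent.michelle@example.com>"


def draft_email(to: str, subject: str, body: str, transparent: bool) -> EmailMessage:
    """Build an email that an agent sends on Michelle's behalf.

    transparent=False: the agent impersonates Michelle; only From is set.
    transparent=True:  the agent also identifies itself in the RFC 5322
                       Sender field (the mailbox responsible for transmission).
    """
    msg = EmailMessage()
    msg["From"] = USER
    if transparent:
        msg["Sender"] = AGENT
    msg["To"] = to
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


as_michelle = draft_email("bob@example.com", "Q1 plan", "Draft attached.", transparent=False)
as_agent = draft_email("bob@example.com", "Q1 plan", "Draft attached.", transparent=True)
print(as_michelle["Sender"])  # None: the recipient sees only Michelle
print(as_agent["Sender"])     # the agent's own address, alongside From: Michelle
```

In both cases the message is "from" Michelle; only the transparent version tells the recipient that an agent pressed send.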

But what makes an agent truly capable of autonomous action? Venture capitalists are converging around four core components working in concert:

- Reasoning engine (LLM) - connectivity to a frontier model provider

- External memory - a database

- Execution capability - access to tools

- Planning mechanism - a scratchpad for self-play
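To make the four components concrete, here is a minimal Python sketch of how they might fit together in a single loop. Everything in it is hypothetical: `Agent` and `toy_reasoner` are illustrative names, the hard-coded `toy_reasoner` stands in for a call to a frontier model, a plain list stands in for the database, and two lambdas stand in for real tools.

```python
from dataclasses import dataclass, field
from typing import Callable

Tool = Callable[[str], str]


@dataclass
class Agent:
    # Reasoning engine: maps (goal, memory, scratchpad) to the next (tool_name, argument).
    # A real agent would call a frontier model provider here.
    reason: Callable[[str, list[str], list[str]], tuple[str, str]]
    # Execution capability: the named tools this agent is allowed to invoke.
    tools: dict[str, Tool]
    # External memory: a list standing in for a database; it persists across runs.
    memory: list[str] = field(default_factory=list)

    def run(self, goal: str, max_steps: int = 5) -> list[str]:
        # Planning mechanism: a scratchpad of intermediate steps that the
        # reasoning engine sees on every iteration.
        scratchpad: list[str] = []
        for _ in range(max_steps):
            tool_name, arg = self.reason(goal, self.memory, scratchpad)
            if tool_name == "done":
                break
            result = self.tools[tool_name](arg)
            scratchpad.append(f"{tool_name}({arg}) -> {result}")
        self.memory.append(f"{goal}: {len(scratchpad)} steps")
        return scratchpad


def toy_reasoner(goal: str, memory: list[str], scratchpad: list[str]) -> tuple[str, str]:
    # Hypothetical fixed policy standing in for an LLM: look up a contact, then email them.
    if not scratchpad:
        return "lookup_contact", "Bob"
    if len(scratchpad) == 1:
        address = scratchpad[0].split(" -> ")[1]
        return "send_email", address
    return "done", ""


agent = Agent(
    reason=toy_reasoner,
    tools={
        "lookup_contact": lambda name: f"{name.lower()}@example.com",
        "send_email": lambda addr: f"sent to {addr}",
    },
)
steps = agent.run("Email Bob the Q1 plan")
for step in steps:
    print(step)
```

The design choice worth noticing is that the reasoning engine only decides; the agent can act only through the entries in `tools`, so whoever supplies those tools bounds what the agent can do.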

You'll notice that many of these components feel commodity-like: many are available through an API call, an off-the-shelf database, or a common architecture like RAG. True differentiation likely lies in the third component, execution capability. I'd take this ingredient one step further and break execution down into three parts: (i) workflow context, (ii) data, and (iii) the ability to perform actions.

The importance of execution leads to my first prediction: an AI agent's prowess will be largely determined by who owns the tools it uses. In investor lingo, those tools are the system of engagement, where actions are executed. The AI agents closest to the system of engagement, and to its proprietary (i) workflow context, (ii) data, and (iii) ability to perform actions, will win.

III. A Framework for Types of AI Agents

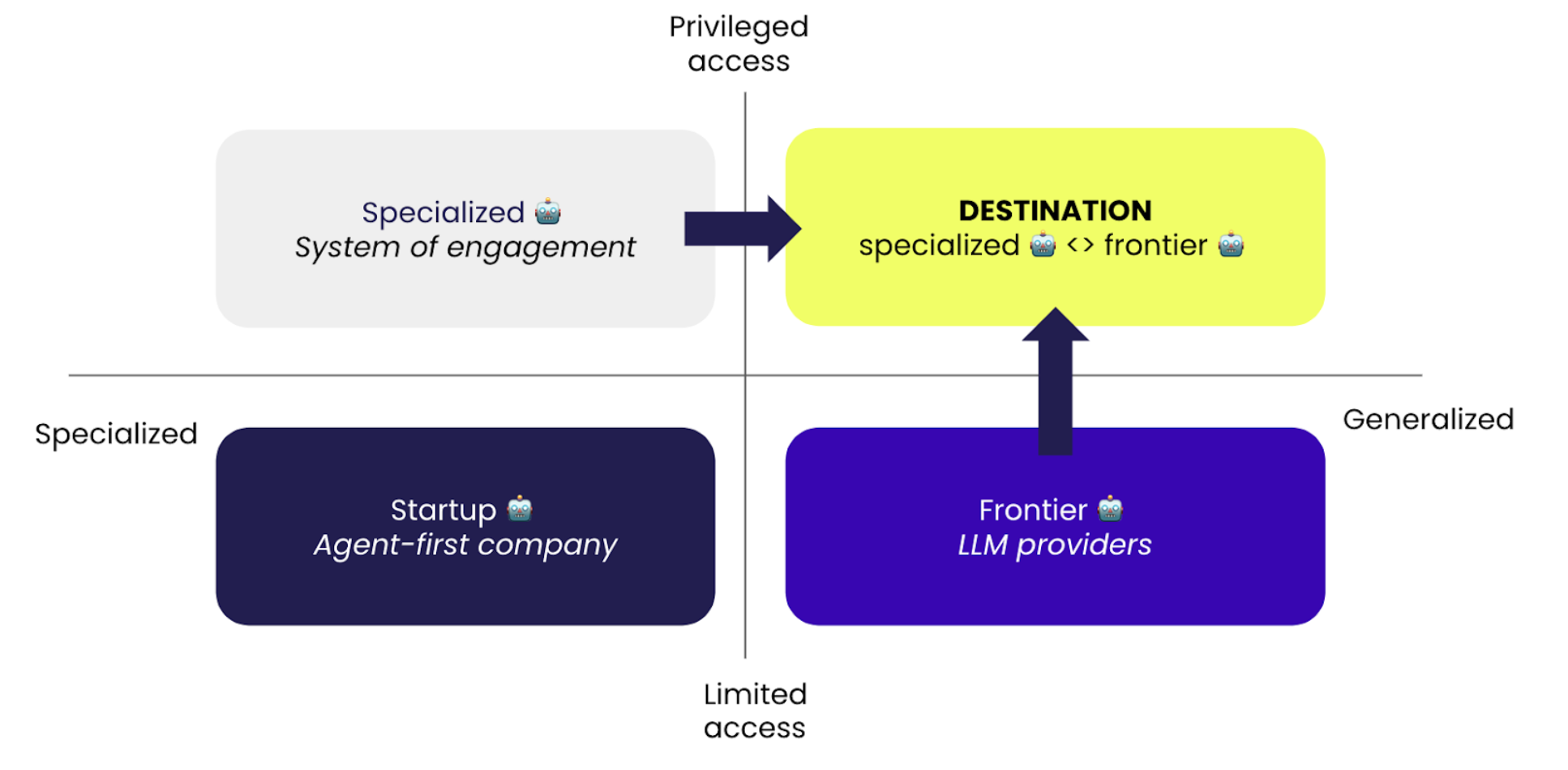

Given the importance of execution, existing market maps for AI agents are missing one key axis: access.

The vertical axis, access, determines what an agent can actually do. It ranges from limited access (public APIs, web browsing, and user credentials) to privileged access (private APIs and system-level permissions).

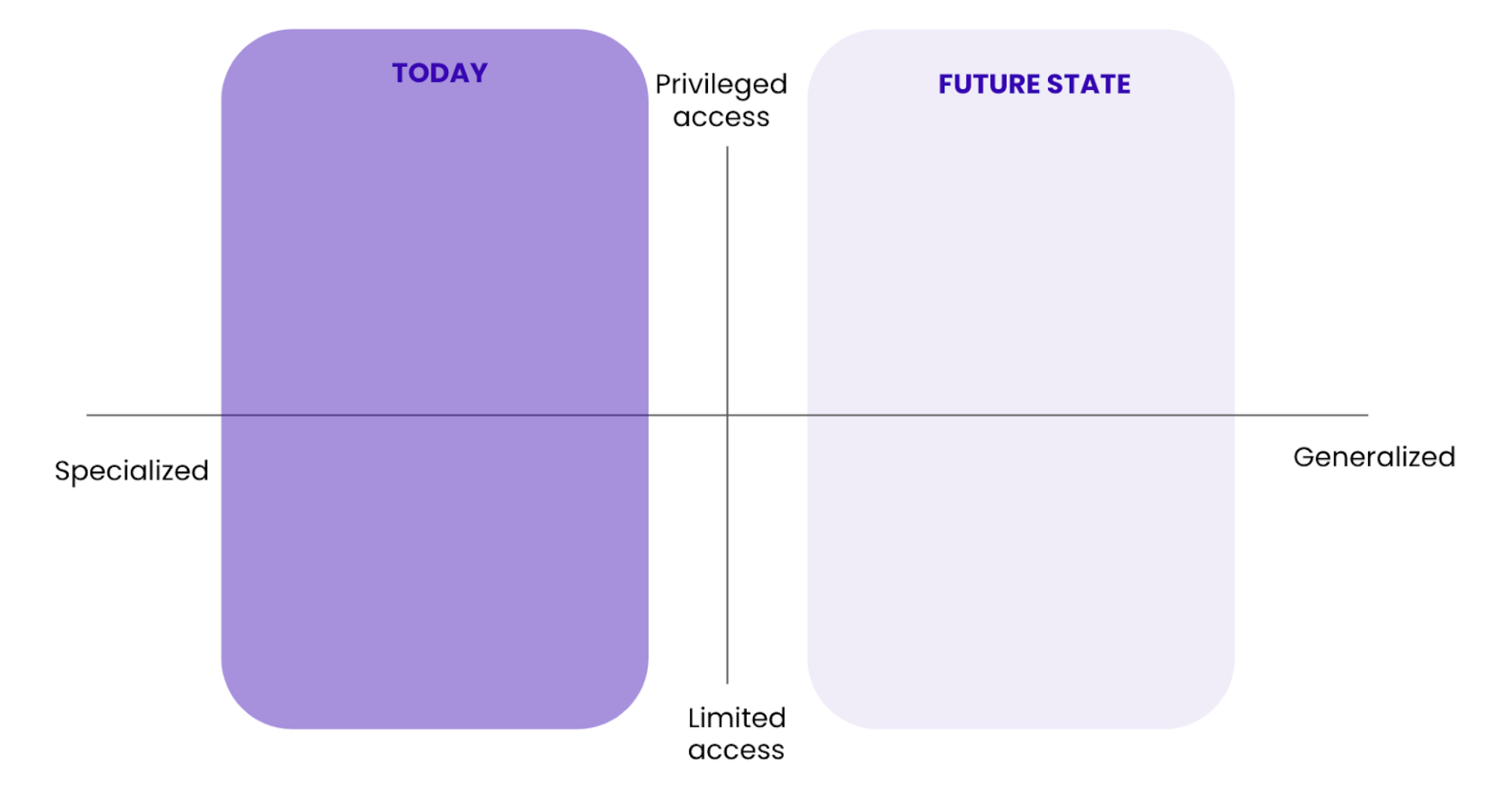

Privileged access includes both superior aggregation of credentials and access to superadmin or private APIs owned by the tool the agent is using. Software companies often limit what external users can access. I remember many requests to access Airtable's private metadata API back in the day. Airtable is not alone: Meta, Twitter, and LinkedIn have all strategically limited what data external parties can access via API.

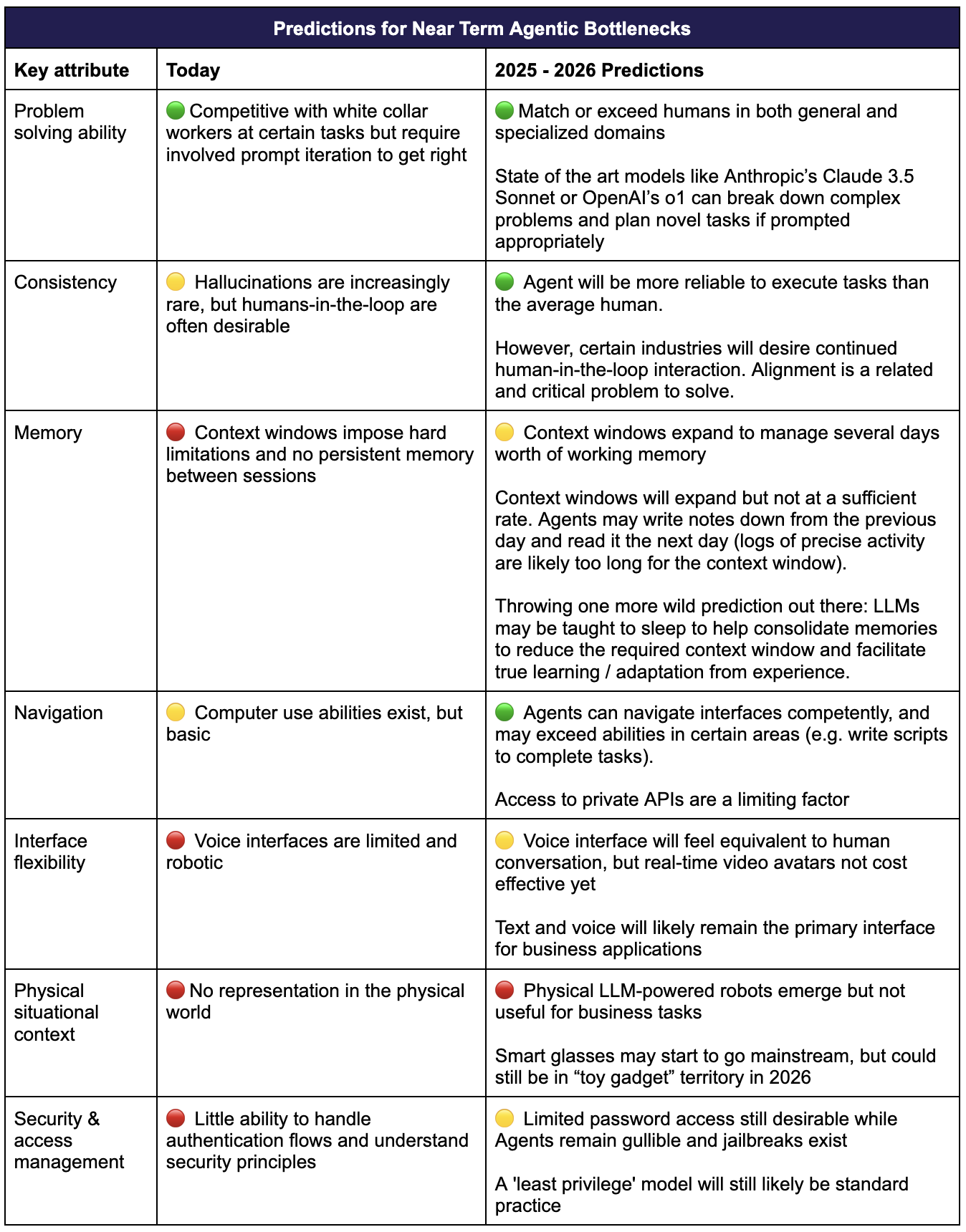

Today, AI agents have only a basic ability to handle authentication flows and a limited understanding of security principles. Even in the future, I suspect it will still be optimal to limit password access, just as you wouldn't share every password with a single employee, and just as you sometimes want friction when logging into your bank account. Security will mirror the 'least privilege' model we rely on today.
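A 'least privilege' model for agents can be sketched in a few lines. This is a minimal Python illustration assuming a scope-based permission model similar to OAuth 2.0 scopes; the scope names, `Grant`, `grant_for_task`, and `call` are hypothetical and not any vendor's API. The agent receives only the scopes a task requires, even when the user has approved more.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grant:
    """A task-scoped grant: which agent, and exactly which scopes it may use."""
    agent: str
    scopes: frozenset[str]


def grant_for_task(agent: str, required: set[str], approved: set[str]) -> Grant:
    """Issue only the scopes this task needs; refuse anything the user never approved."""
    missing = required - approved
    if missing:
        raise PermissionError(f"{agent} needs unapproved scopes: {sorted(missing)}")
    return Grant(agent, frozenset(required))


def call(grant: Grant, scope: str) -> str:
    """Check every action against the grant before executing it."""
    if scope not in grant.scopes:
        raise PermissionError(f"{grant.agent} lacks scope {scope!r}")
    return f"{grant.agent} used {scope}"


# Hypothetical scopes: everything the user has approved for this agent overall.
approved = {"crm:read", "email:send", "billing:read"}

# A follow-up-email task needs only two of them.
grant = grant_for_task("agent-of-michelle", {"crm:read", "email:send"}, approved)
print(call(grant, "crm:read"))  # agent-of-michelle used crm:read

try:
    call(grant, "billing:read")  # approved overall, but not granted for this task
except PermissionError as err:
    print(err)  # agent-of-michelle lacks scope 'billing:read'
```

Issuing a fresh, narrow grant per task mirrors how you might give an employee access to one system for one project rather than handing over every password.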

The horizontal axis is more common across market maps: specialized vs generalized. Most investors today focus on vertical (industry-specific) applications of AI agents. This makes sense, given that reasoning engines (LLMs) are still in their infancy, require more fine-tuning, and are less effective at generalized tasks.

However, I notice founders and investors alike dismissing the possibility of highly intelligent generalized agents, as if their absence were a foregone conclusion. True, a model might not always have the full context of analog interactions, and vertical SaaS has historically been a safe bet. But one can imagine ways a generalized agent could get pretty close, while also having a better memory than we do. My sense is that a better way to think about the X-axis is as investing in what is possible today versus what will be possible in the future.

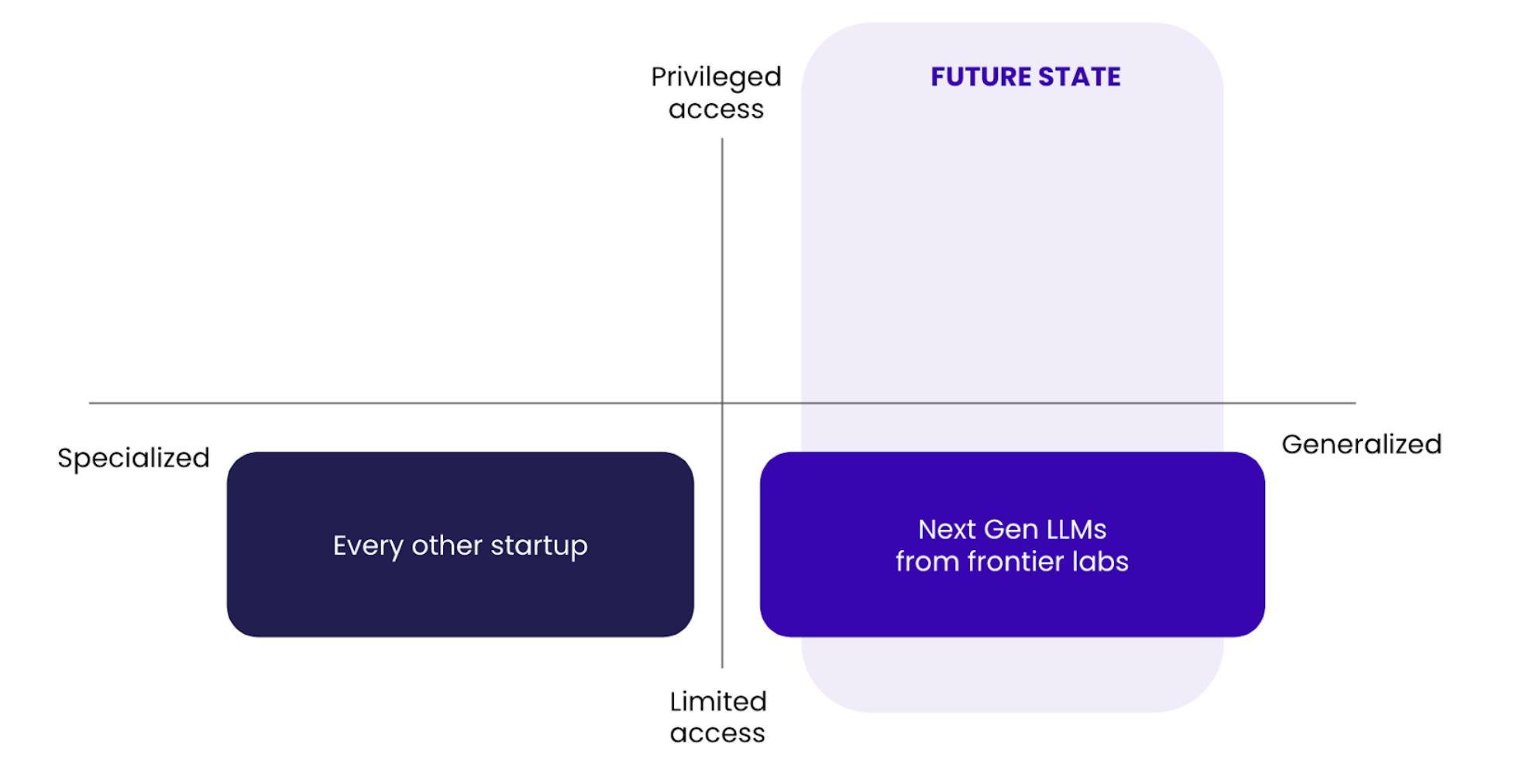

My second prediction is about generalized AI agents: they are indeed coming, and their timing depends on future LLM capabilities. This creates a strategic challenge for specialized agent startups. An AI agent's ability to execute depends on access to best-in-class systems of engagement (as Salesforce is for CRM), which means startups must first build a competitive core product. Building AI agents is a privilege reserved for companies that can deliver a 10x better product than the competition. The opportunity lies in finding industries where either the systems of engagement are outdated or the incumbents are too slow to innovate. These rare startup opportunities exist, and they're the ones to watch.

While the market is early, we are starting to see incumbents announce agentic products. One example is Salesforce's Agentforce, where the CRM is the system of engagement, with the promise of a highly capable agentic layer to assist with execution in the future. You could imagine other platforms, from ERPs to marketing automation software, building their own dedicated agents with privileged access: agents that can not only use the platform like a user but, as LLMs get smarter and more trustworthy, also call private APIs that make them more efficient and effective.

Eventually, agents will need to work together. For example, specialized AI agents with privileged access will need to work in concert with other specialized and/or generalized agents that may have access to different tools.

The importance of access to the full power of a tool ultimately raises the question: is "system of execution" a more appropriate framing than "system of engagement"? In the next section, we'll explore the limits of execution beyond a single tool and look at what could impact agentic development in 2025.

IV. Bottlenecks for the Brave New World

Now that we have a framework for the types of agents that could emerge, what technical and operational hurdles stand between today's capabilities and this brave new world of highly capable, aligned agents?

While we can extrapolate from existing trends, technology is evolving rapidly and no one can predict exactly where agents will take us. In the table below, I've distilled what I believe are the key attributes - and bottlenecks - that will shape agent development in the next two years. This felt like a reasonable timeframe to allow for a major advance in LLMs, and for the agentic market to adapt.

These bottlenecks fall into two major categories: technical limitations (like memory) and operational challenges (like security). The colored circles indicate where I believe the near-term capability bottlenecks lie. This should help inform how we build in 2025. Let's examine each in detail.

The next five years will indeed be different - but not just because of AI advances. The true differentiator will be how we architect systems to harness agent capabilities. For startups, this means building the right thing, at the right time, in the right strategic position. While conventional wisdom holds that being early is the same as being wrong, the accelerating pace of AI creates an even greater risk: building for today's capabilities without anticipating tomorrow's. Success requires understanding not just initial market timing, but market velocity.

At Anrok, we're proud to be the trusted partner to leading innovators in this digital economy. We're excited to see what you build next.